Happy Wednesday, fellow AI adventurers!

Welcome to another exciting edition of our weekly AI adventure.

We’ve sprinkled a dash of fun and a pinch of wit into our AI exploration, so prepare to be simultaneously educated and entertained.

AI, Hands Off Our Content! Publishers Gear Up to Protect Their Articles

Did you hear the news? When no one was looking, AI went on a feeding frenzy and devoured the entire internet! And guess what? The creators of all that internet goodness are in a bit of a panic. After all, more people talking to chatbots means fewer people visiting their sites, which means less money in their pockets. So, what can they do about it? Let’s take a look at their options:

1. They could gather their forces and go for the big lawsuit. A group of publishers, including big names like Axel Springer, News Corp, and IAC, is itching to sue the tech companies for a hefty sum of cash.

2. Another option is to negotiate for compensation. The New York Times left the publisher coalition, which has people wondering whether it plans to strike its own deal with Big Tech, following in the footsteps of the Associated Press (AP).

3. Some publishers are trying a different approach: flat-out forbidding it. The New York Times has updated its Terms of Service to prohibit scraping its content. Translation: stay away from our stuff or face the consequences!

4. A more direct way to keep AI out of their content is to put up paywalls. It’s effective, sure, but it means fewer people get to see their stuff overall. Tough decision!

5. And for those who want to get really technical, OpenAI has published a snippet of code that blocks its web crawler, GPTBot, from accessing your content. A handy option if you’re tech-savvy.
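For the curious: OpenAI's documentation says its crawler identifies itself as `GPTBot` and respects robots.txt. That means the blocking "code" boils down to two lines in your site's robots.txt file.

Here's a minimal sketch that holds those two lines and uses Python's built-in `urllib.robotparser` to check how a well-behaved crawler would read them. The example.com URLs and the `is_allowed` helper are our own illustrative choices, not something OpenAI ships. Keep in mind that robots.txt works on the honor system: it only stops crawlers that choose to obey it.

```python
from urllib.robotparser import RobotFileParser

# The two robots.txt lines that block GPTBot from the whole site,
# assuming OpenAI's crawler identifies itself with the "GPTBot" user agent.
# Crawlers not named here (e.g. Googlebot) are unaffected.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /
"""

def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules above let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)

print(is_allowed("GPTBot", "https://example.com/articles/latest"))     # False
print(is_allowed("Googlebot", "https://example.com/articles/latest"))  # True
```

On a real site, you'd simply save the two lines as `robots.txt` at the root of your domain. The Python part only demonstrates what they mean.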

Now, you might be wondering why all this matters. Well, the truth is, the damage is pretty much done. AI has already had its fill of the internet buffet. So now the focus is on whether publishers will actually sue and get some compensation. It’s likely they’ll go after the big fish. And can publishers protect their freshly published content? That’s a bit uncertain. Our advice is to start with option #5 and go from there. Better safe than sorry!

Treats To Not Try

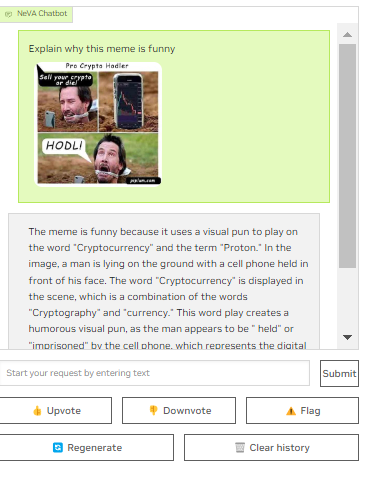

Nvidia released an AI model called NeVA that can “see” images and respond to questions using GPT-4.

We tested it on yesterday’s meme, and the AI couldn’t explain the joke.

FraudGPT: The Next-Generation AI Tool Ready to Cause Online Mayhem!

Guess what? The dark side has jumped on the AI bandwagon, and it was bound to happen sooner or later. Some new AI tools are causing a stir in the shadiest corners of the dark web.

FraudGPT and WormGPT, the troublemakers of the month, are making quite the buzz. Netenrich, a cloud security company, spilled the beans on what FraudGPT can actually do. Brace yourself, because this AI mischief-maker can do some serious damage:

It can write malicious code. Yup, it’s like a virtual criminal mastermind.

It can create sneaky malware that’s virtually undetectable. Sneaky indeed.

It’s even capable of crafting convincing phishing pages.

Oh, and it can cook up some wicked hacking tools too.

And that’s just the tip of the iceberg! To get their hands on FraudGPT, wannabe bad guys need to shell out a pretty penny. We’re talking a subscription price starting at $200 per month, all the way up to a whopping $1,700 per year.

But hey, it’s not all doom and gloom. According to Sven Krasser, chief scientist and senior VP at CrowdStrike, these AI tools might increase the quantity of attacks, but the quality stays the same. So, in a way, they’re not really pushing the envelope. They’re just making it easier for less-skilled hackers to cause chaos. Silver lining?

Now, here’s some reassurance: these generative AI tools are nowhere near as sophisticated as the ones secretly held by governments around the world.

Don’t forget to check us out on Wednesdays for all the latest AI news – because why limit yourself to just one kind of intelligence?